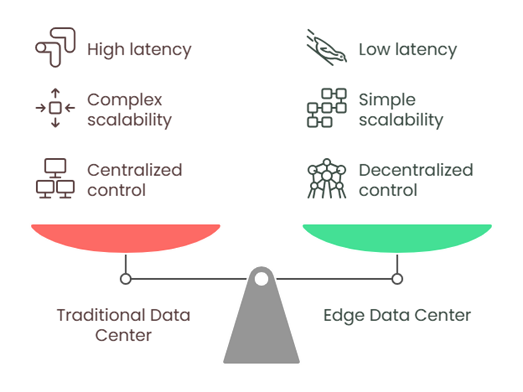

The rise of IoT devices, 5G networks, and applications that require instant responses, such as smart sensors and autonomous vehicles, has exposed a hard physical limit: latency increases with distance. Traditional data centers are powerful for core workloads like large-scale databases, but they are often located in centralized regional hubs, far from many of the users and devices they serve. That distance is a problem for real-time applications that demand minimal delay.

To bridge this gap, edge data centers have emerged as a decentralized alternative, placing computing resources closer to end users. This guide directly compares these two models, examining how location, latency, and scale define their differences.
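To put a rough number on "latency increases with distance," the sketch below estimates the minimum round-trip time imposed by fiber propagation alone. It assumes light travels through optical fiber at about 200,000 km/s (roughly two-thirds of its speed in a vacuum) and ignores routing, queuing, and processing delays, so real round trips will be longer. The distances and the `path_stretch` parameter are hypothetical illustrations.

```python
# Rough lower bound on network round-trip time (RTT) from fiber propagation alone.
# Assumption: light in optical fiber travels at about 200,000 km/s (~2/3 of c).
FIBER_SPEED_KM_PER_MS = 200.0  # 200,000 km/s expressed as km per millisecond


def min_rtt_ms(distance_km: float, path_stretch: float = 1.0) -> float:
    """Propagation-only round-trip time in milliseconds.

    path_stretch (hypothetical parameter) models fiber routes that are longer
    than the straight-line distance; 1.0 means a perfectly straight cable.
    Queuing, routing, and processing delays are ignored, so real RTTs are higher.
    """
    if distance_km < 0 or path_stretch < 1.0:
        raise ValueError("distance must be >= 0 and path_stretch >= 1.0")
    one_way_ms = distance_km * path_stretch / FIBER_SPEED_KM_PER_MS
    return 2 * one_way_ms


# Hypothetical distances from a user to three kinds of sites.
for label, km in [("metro edge site", 20), ("regional hub", 500), ("distant campus", 3000)]:
    print(f"{label:>16}: {min_rtt_ms(km):6.1f} ms minimum RTT")
```

Under these assumptions, a site 20 km away adds only about 0.2 ms of propagation delay, while one 3,000 km away adds at least 30 ms before any router or server touches the packet.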

What Is a Traditional Data Center?

Definition

A traditional data center is a centralized facility housing servers, storage, and networking equipment. These data centers are typically located in regional hubs or in company-owned, on-premises buildings, and are designed to centralize control over computing resources, power, cooling, and security.

Key Features

- Centralized hardware setup

- Long-distance data travel, resulting in higher latency

- High capacity for compute and storage

- Siloed infrastructure components (compute, storage, networking)

- Expensive and time-consuming to scale

Common Use Cases

Enterprises run core workloads (think databases, ERP, or compliance-sensitive apps) in their own data halls when they need full control. Cloud and hosting providers use the same centralized model to offer infrastructure-as-a-service to customers. Big-data jobs, such as Hadoop clusters or AI training runs that need massive power and cooling, also land on these sites.

What Is an Edge Data Center?

Definition

An edge data center is a smaller, distributed facility placed physically closer to end users or devices generating data. Its primary purpose is to reduce latency and process data locally, rather than routing everything back to a central data hub.

Key Features

- Located at the edge of the network (e.g., cell towers, metro sites)

- Ultra-low latency (single-digit milliseconds)

- Prefabricated or modular for rapid deployment

- Supports localized processing and caching

- Reduces WAN traffic and network bottlenecks
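Localized caching, one of the features listed above, can be sketched as a time-to-live (TTL) cache that an edge site keeps in front of a central origin. This is a minimal illustration, not a production design: `EdgeCache` and the `fetch_from_core` callable are hypothetical names, and the 30-second TTL is an arbitrary example value.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Minimal TTL cache an edge site might keep in front of a central origin.

    fetch_from_core is a hypothetical callable standing in for a request that
    travels over the WAN to the core data center.
    """

    def __init__(self, fetch_from_core: Callable[[str], bytes], ttl_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_core
        self._ttl = ttl_s
        self._clock = clock
        self._store: Dict[str, Tuple[float, bytes]] = {}  # key -> (expiry time, value)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1  # served locally, no WAN round trip
            return entry[1]
        self.misses += 1  # miss or expired: fetch from the core once and keep a copy
        value = self._fetch(key)
        self._store[key] = (now + self._ttl, value)
        return value


# Usage with a stand-in origin that records how often it is contacted.
origin_calls = []


def fake_core(key: str) -> bytes:
    origin_calls.append(key)
    return f"content for {key}".encode()


cache = EdgeCache(fake_core, ttl_s=30.0)
for _ in range(5):
    cache.get("/video/intro.mp4")
print(cache.hits, cache.misses, len(origin_calls))  # 4 1 1
```

Only the first request crosses the WAN; repeat requests within the TTL are answered locally, which is the mechanism behind reduced WAN traffic. A real deployment would also need size limits, eviction, and cache invalidation.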

Common Use Cases

An edge data center lets autonomous vehicles analyze sensor feeds in real time. It also runs smart city cameras, traffic lights, and factory sensors without sending every byte to a far-off cloud. Remote offices use it for local file serving so staff stay productive during network disruptions.
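Handling factory sensor data locally, instead of shipping every raw sample to a central cloud, can be sketched as windowed aggregation at the edge: a window of readings is collapsed into one summary record, and only out-of-range readings are carried along individually. This is one illustrative technique, not the only way edge sites process data; the sensor ID, the 100-sample window, and the alert threshold are hypothetical values.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class WindowSummary:
    sensor_id: str
    count: int
    average: float
    minimum: float
    maximum: float
    alerts: List[float]  # individual readings above the alert threshold


def summarize_window(sensor_id: str, readings: List[float], alert_threshold: float) -> WindowSummary:
    """Collapse one time window of raw readings into a single summary record.

    Only the summary (which still carries any out-of-range readings) would be
    sent upstream, instead of every raw sample.
    """
    if not readings:
        raise ValueError("a window must contain at least one reading")
    alerts = [r for r in readings if r > alert_threshold]
    return WindowSummary(sensor_id, len(readings), mean(readings),
                         min(readings), max(readings), alerts)


# Hypothetical window: 100 temperature samples from one factory sensor.
window = [70.0 + (i % 10) * 0.1 for i in range(100)]
window[42] = 95.0  # a single spike that should still reach the core
summary = summarize_window("press-07", window, alert_threshold=90.0)
print(f"{summary.count} samples -> 1 summary, max={summary.maximum}, alerts={summary.alerts}")
# 100 samples -> 1 summary, max=95.0, alerts=[95.0]
```

The trade-off is that the core no longer sees every sample, so the window length and threshold decide how much detail is kept locally versus forwarded.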

Key Differences between Edge and Traditional Data Centers

| Factor | Edge Data Centers | Traditional/Core Data Centers |
|---|---|---|
| Location & Latency | Deployed at the network edge (on-prem, at cell towers, metro/aggregation sites) so that round-trip latency can stay in the single-digit-millisecond range. | Concentrated in a few regional or hyperscale campuses, forcing traffic to traverse longer WAN paths: 50 ms or more for distant users[1]. |
| Scalability & Deployment Tempo | Prefabricated/modular designs allow “plug-and-play” installs that can be racked, stacked, and online in weeks, then scaled one micro-site at a time. | New halls require land acquisition, multi-year construction (≈ 3-6 years end-to-end)[2], and large capital outlays before the first workload can land. |
| Use-Case Fit | Optimized for real-time and bandwidth-intensive workloads (IoT telemetry, autonomous vehicles, AR/VR, smart grid, vRAN, cloud gaming, etc.) where every millisecond and local data processing matter. | Ideal for high-throughput, non-latency-sensitive jobs: big-data analytics, archival storage, batch processing, ERP, and conventional web/app hosting. |
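The use-case split in the table can be expressed as a simple placement rule for a hybrid setup: run each workload in core capacity when the core's round-trip time fits the workload's latency budget, and fall back to the nearest edge site only when it does not. This is an illustrative policy, not an industry standard. The site names, RTT figures, and budgets are hypothetical; the 55 ms core RTT is chosen to sit in the "50 ms or more" range cited for distant users.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Site:
    name: str
    tier: str      # "edge" or "core"
    rtt_ms: float  # hypothetical round-trip time from the site to the users served


@dataclass(frozen=True)
class Workload:
    name: str
    latency_budget_ms: float  # worst round-trip time the workload can tolerate


def place(workload: Workload, sites: Sequence[Site]) -> Site:
    """Prefer core sites that meet the latency budget; otherwise use the fastest feasible site."""
    feasible = [s for s in sites if s.rtt_ms <= workload.latency_budget_ms]
    if not feasible:
        raise ValueError(f"no site meets the {workload.latency_budget_ms} ms budget for {workload.name}")
    core = [s for s in feasible if s.tier == "core"]
    candidates = core or feasible  # fall back to edge only when no core site qualifies
    return min(candidates, key=lambda s: s.rtt_ms)


SITES = [
    Site("metro-edge-01", "edge", rtt_ms=4.0),
    Site("regional-core", "core", rtt_ms=55.0),
]

for w in [Workload("ar-rendering", 10.0), Workload("cloud-gaming", 20.0), Workload("nightly-etl", 1000.0)]:
    print(f"{w.name} -> {place(w, SITES).name}")
# ar-rendering -> metro-edge-01
# cloud-gaming -> metro-edge-01
# nightly-etl -> regional-core
```

Preferring the core whenever it is fast enough reflects the table's point that large centralized sites suit non-latency-sensitive jobs, while scarcer edge capacity is reserved for workloads that actually need it.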

Why Edge Data Centers Are the Future

Edge computing isn’t just a buzzword; it’s a response to real-world digital transformation demands. The growing need for speed, responsiveness, and localized processing is driving a shift from traditional models to hybrid architectures that blend edge and core data center environments.

From 5G rollouts to smart grid optimization, edge data centers enable organizations to process, analyze, and act on data close to where it is generated, with minimal delay and strong security.

Be Part of the Edge Revolution with Data Center Asia 2025

At Data Center Asia 2025, we are part of the edge revolution because we believe edge data centers will play a key role in transforming digital infrastructure throughout Asia-Pacific.

At our flagship events in Hong Kong, Kuala Lumpur, and Jakarta, we aim to show how edge data centers can be combined with core systems, highlighting tools that make operations more unified.

Specifically, the events feature next-generation security measures, such as AI surveillance, for protecting distributed networks. We also organize talks on sustainability and new cooling solutions that address the challenges of building edge data centers across many different environments.

Register now to find solutions that are tried and trusted, secure, and efficient as digital transformation advances!

References

- [1] Edge Data Centres: A Comprehensive Guide. Available at: https://stlpartners.com/articles/edge-computing/edge-data-centres/ (Accessed: 9 June).

- [2] How Long Does It Take to Develop a Data Center? A Step-by-Step Timeline. Available at: https://www.avisenlegal.com/how-long-does-it-take-to-develop-a-data-center-a-step-by-step-timeline/ (Accessed: 9 June).